Self-Host

Learn how to self-host thynk on your own machine.

Benefits to self-hosting:

- Privacy: Your data will never have to leave your private network. You can even use thynk without an internet connection if deployed on your personal computer.

- Customization: You can customize thynk to your liking, from models, to host URL, to feature enablement.

Setup thynk

These are the general setup instructions for self-hosted thynk. You can install the thynk server using either Docker or Pip.

To use the offline chat model with your GPU, we recommend using the Docker setup with Ollama. You can also use the local thynk setup via the Python package directly.

Restart your thynk server after the first run to ensure all settings are applied correctly.

Docker

MacOS

Prerequisites

Docker

- Option 1: Install Docker Desktop. Make sure you also install the Docker Compose tool.

- Option 2: Use Homebrew to install Docker and Docker Compose.

brew install --cask docker

brew install docker-compose

Setup

- Download the thynk docker-compose.yml file from GitHub

mkdir ~/.thynk && cd ~/.thynk

wget https://raw.githubusercontent.com/khoj-ai/khoj/master/docker-compose.yml

- Configure the environment variables in the docker-compose.yml

  - Set thynk_ADMIN_PASSWORD, thynk_DJANGO_SECRET_KEY (and optionally the thynk_ADMIN_EMAIL) to something secure. This allows you to customize thynk later via the admin panel.

  - Set OPENAI_API_KEY, ANTHROPIC_API_KEY, or GEMINI_API_KEY to your API key if you want to use OpenAI, Anthropic or Gemini commercial chat models respectively.

  - Uncomment OPENAI_API_BASE to use Ollama running on your host machine. Or set it to the URL of your OpenAI compatible API like vLLM or LMStudio.

- Start thynk by running the following command in the same directory as your docker-compose.yml file.

cd ~/.thynk

docker-compose up

Windows

Prerequisites

- Install WSL2 and restart your machine

# Run in PowerShell

wsl --install

- Install Docker Desktop with WSL2 backend (default)

Setup

- Download the thynk docker-compose.yml file from GitHub

# Windows users should use their WSL2 terminal to run these commands

mkdir ~/.thynk && cd ~/.thynk

wget https://raw.githubusercontent.com/khoj-ai/khoj/master/docker-compose.yml

- Configure the environment variables in the docker-compose.yml

  - Set thynk_ADMIN_PASSWORD, thynk_DJANGO_SECRET_KEY (and optionally the thynk_ADMIN_EMAIL) to something secure. This allows you to customize thynk later via the admin panel.

  - Set OPENAI_API_KEY, ANTHROPIC_API_KEY, or GEMINI_API_KEY to your API key if you want to use OpenAI, Anthropic or Gemini commercial chat models respectively.

  - Uncomment OPENAI_API_BASE to use Ollama running on your host machine. Or set it to the URL of your OpenAI compatible API like vLLM or LMStudio.

- Start thynk by running the following command in the same directory as your docker-compose.yml file.

# Windows users should use their WSL2 terminal to run these commands

cd ~/.thynk

docker-compose up

Linux

Prerequisites

Install Docker Desktop. You can also use your package manager to install Docker Engine & Docker Compose.

Setup

- Download the thynk docker-compose.yml file from GitHub

mkdir ~/.thynk && cd ~/.thynk

wget https://raw.githubusercontent.com/khoj-ai/khoj/master/docker-compose.yml

- Configure the environment variables in the docker-compose.yml

  - Set thynk_ADMIN_PASSWORD, thynk_DJANGO_SECRET_KEY (and optionally the thynk_ADMIN_EMAIL) to something secure. This allows you to customize thynk later via the admin panel.

  - Set OPENAI_API_KEY, ANTHROPIC_API_KEY, or GEMINI_API_KEY to your API key if you want to use OpenAI, Anthropic or Gemini commercial chat models respectively.

  - Uncomment OPENAI_API_BASE to use Ollama running on your host machine. Or set it to the URL of your OpenAI compatible API like vLLM or LMStudio.

- Start thynk by running the following command in the same directory as your docker-compose.yml file.

cd ~/.thynk

docker-compose up

By default, thynk is only accessible from the machine it is running on. To access thynk from a remote machine, see the Remote Access Docs.

Your setup is complete once you see 🌖 thynk is ready to use in the server logs on your terminal.
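The Docker setup steps ask you to set thynk_ADMIN_PASSWORD and thynk_DJANGO_SECRET_KEY "to something secure". One possible way to produce such values is sketched below with Python's standard secrets module. The function name and the chosen lengths are assumptions for illustration, not requirements stated by thynk.

```python
import secrets


def generate_admin_secrets(password_bytes: int = 24, key_bytes: int = 50) -> dict[str, str]:
    """Return random, URL-safe values for the two secret settings.

    The byte counts are illustrative defaults, not values mandated by thynk.
    token_urlsafe only emits letters, digits, '-' and '_', so the values can
    be pasted into docker-compose.yml without escaping.
    """
    return {
        "thynk_ADMIN_PASSWORD": secrets.token_urlsafe(password_bytes),
        "thynk_DJANGO_SECRET_KEY": secrets.token_urlsafe(key_bytes),
    }


if __name__ == "__main__":
    # Print NAME=value lines to copy into the environment section.
    for name, value in generate_admin_secrets().items():
        print(f"{name}={value}")
```

Each run prints fresh values, so generate them once and keep them in your docker-compose.yml.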

Pip

1. Install Postgres (with PgVector)

thynk uses Postgres DB for all server configuration and to scale to multi-user setups. It uses the pgvector package in Postgres to manage your document embeddings. Both Postgres and pgvector need to be installed for thynk to work.

MacOS

Install Postgres.app. This comes pre-installed with pgvector and relevant dependencies.

Windows

For detailed instructions and troubleshooting, see this section.

- Use the recommended installer.

- Follow instructions to Install PgVector in case you need to manually install it. Windows support is experimental for pgvector currently, so we recommend using Docker.

Linux, From Source

- Follow instructions to Install Postgres

- Follow instructions to Install PgVector in case you need to manually install it.

2. Create the Khoj database

- MacOS

createdb khoj -U postgres --password

- Windows

createdb -U postgres khoj --password

- Linux

sudo -u postgres createdb khoj --password

Make sure to update the POSTGRES_HOST, POSTGRES_PORT, POSTGRES_USER, POSTGRES_DB or POSTGRES_PASSWORD environment variables to match any customizations in your Postgres configuration.
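To see which values the POSTGRES_* variables currently resolve to in your shell, a small check like the following may help. It is a sketch only. The fallback values are assumptions for illustration: the user and database names are taken from the createdb commands above, and the host and port are the stock Postgres defaults. They are not documented server defaults.

```python
import os
from typing import Mapping

# Illustrative fallbacks (assumptions, see the note above).
FALLBACKS = {
    "POSTGRES_HOST": "localhost",
    "POSTGRES_PORT": "5432",
    "POSTGRES_USER": "postgres",
    "POSTGRES_DB": "khoj",
}


def effective_postgres_settings(env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Resolve each POSTGRES_* variable, using the fallback when it is unset."""
    settings = {name: env.get(name, fallback) for name, fallback in FALLBACKS.items()}
    # Never echo the password itself; only report whether one is set.
    settings["POSTGRES_PASSWORD"] = "<set>" if env.get("POSTGRES_PASSWORD") else "<unset>"
    return settings


if __name__ == "__main__":
    for name, value in effective_postgres_settings().items():
        print(f"{name}={value}")
```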

3. Install Khoj Server

- Make sure python and pip are installed on your machine

- Check llama-cpp-python setup if you hit any llama-cpp issues with the installation

Run the following command in your terminal to install the Khoj server.

MacOS

- ARM/M1+

CMAKE_ARGS="-DGGML_METAL=on" python -m pip install khoj

- Intel

python -m pip install khoj

Windows

Run the following commands in PowerShell on Windows.

- CPU

# Install Khoj

py -m pip install khoj

- NVIDIA (CUDA) GPU

# 1. To use NVIDIA (CUDA) GPU

$env:CMAKE_ARGS = "-DGGML_CUDA=on"

# 2. Install Khoj

py -m pip install khoj

- AMD (ROCm) GPU

# 1. To use AMD (ROCm) GPU

$env:CMAKE_ARGS = "-DGGML_HIPBLAS=on"

# 2. Install Khoj

py -m pip install khoj

- VULKAN GPU

# 1. To use VULKAN GPU

$env:CMAKE_ARGS = "-DGGML_VULKAN=on"

# 2. Install Khoj

py -m pip install khoj

Linux

- CPU

python -m pip install khoj

- NVIDIA (CUDA) GPU

CMAKE_ARGS="-DGGML_CUDA=on" FORCE_CMAKE=1 python -m pip install khoj

- AMD (ROCm) GPU

CMAKE_ARGS="-DGGML_HIPBLAS=on" FORCE_CMAKE=1 python -m pip install khoj

- VULKAN GPU

CMAKE_ARGS="-DGGML_VULKAN=on" FORCE_CMAKE=1 python -m pip install khoj
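The install commands above differ only in the environment variables set before pip runs. The sketch below collects those flags in one lookup table, which can be handy if you script installs across machines. The backend keys ("cpu", "metal" and so on) and the function name are hypothetical labels chosen for this example. The flag values themselves are copied from the commands above.

```python
# Flags copied from the install commands in this section.
CMAKE_ARGS_BY_BACKEND = {
    "cpu": "",
    "metal": "-DGGML_METAL=on",    # Mac ARM/M1+
    "cuda": "-DGGML_CUDA=on",      # NVIDIA
    "rocm": "-DGGML_HIPBLAS=on",   # AMD
    "vulkan": "-DGGML_VULKAN=on",
}


def install_environment(backend: str, force_cmake: bool = False) -> dict[str, str]:
    """Return the extra environment variables to set before `pip install khoj`.

    force_cmake mirrors the FORCE_CMAKE=1 used in the Linux GPU commands.
    """
    try:
        flags = CMAKE_ARGS_BY_BACKEND[backend.lower()]
    except KeyError:
        raise ValueError(f"unknown backend: {backend!r}") from None
    if not flags:
        # CPU installs need no extra variables.
        return {}
    env = {"CMAKE_ARGS": flags}
    if force_cmake:
        env["FORCE_CMAKE"] = "1"
    return env


if __name__ == "__main__":
    print(install_environment("cuda", force_cmake=True))
```

To run the install itself you could merge the returned mapping into os.environ and pass it to subprocess.run; that step is left out here so the sketch has no side effects.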

4. Start Khoj Server

Run the following command from your terminal to start the Khoj service.

khoj --anonymous-mode

--anonymous-mode allows access to Khoj without requiring login. This is usually fine for local-only, single-user setups. If you need authentication, follow the authentication setup docs.

First Run

On the first run of the above command, you will be prompted to:

- Create an admin account with an email and secure password

- Customize the chat models to enable

Your setup is complete once you see 🌖 Khoj is ready to use in the server logs on your terminal!

To start Khoj automatically in the background, use Task Scheduler on Windows or cron on Mac and Linux (e.g. with @reboot khoj).

Use thynk

You can now open the web app at http://localhost:42110 and start interacting!

Nothing else is necessary, but you can customize your setup further by following the steps below.

The offline chat model gets downloaded when you first send a message to it. The download can take a few minutes! Subsequent messages should be faster.
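If the web app does not load, it can help to confirm that something is answering on the server URL before debugging further. The following is a minimal sketch using only the standard library. The function name is hypothetical, and the default URL is the one given above.

```python
import urllib.error
import urllib.request


def is_server_up(url: str = "http://localhost:42110", timeout: float = 3.0) -> bool:
    """Return True if any HTTP response comes back from the given URL."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just not with a success status.
        return True
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout and similar.
        return False


if __name__ == "__main__":
    print("server reachable" if is_server_up() else "server not reachable")
```

This only checks that an HTTP server responds at that address. It does not verify that the responder is thynk or that the chat models are configured.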

Add Chat Models

Login to the thynk Admin Panel

Go to http://localhost:42110/server/admin and log in with the admin credentials you set up during installation.

Ensure you are using localhost, not 127.0.0.1, to access the admin panel to avoid the CSRF error. You may hit this error if you try to access thynk exposed on a custom domain (e.g. 192.168.12.3 or example.com) or over HTTP. Set the environment variables thynk_DOMAIN=your-domain and thynk_NO_HTTPS=True if required to avoid this error.

Using Safari on Mac? You might not be able to login to the admin panel. Try using Chrome or Firefox instead.
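As a rough illustration of the domain settings described above, the sketch below derives the two environment variables from the URL you plan to open in the browser. It rests on assumptions that are not confirmed by this guide: that thynk_DOMAIN takes the bare host without a port, that no variables are needed when the host is localhost, and that thynk_NO_HTTPS is only wanted when the URL uses plain HTTP. The function name is hypothetical.

```python
from urllib.parse import urlsplit


def csrf_env_for(url: str) -> dict[str, str]:
    """Suggest environment variables for reaching thynk at `url`.

    Assumptions: the domain variable wants the host without a port, and
    localhost needs no extra settings.
    """
    parts = urlsplit(url)
    if not parts.hostname:
        raise ValueError(f"no host found in URL: {url!r}")
    if parts.hostname == "localhost":
        return {}
    env = {"thynk_DOMAIN": parts.hostname}
    if parts.scheme == "http":
        env["thynk_NO_HTTPS"] = "True"
    return env


if __name__ == "__main__":
    for name, value in csrf_env_for("http://192.168.12.3:42110").items():
        print(f"{name}={value}")
```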

Configure Chat Model

Set up which chat model you want to use. thynk supports local and online chat models.

OpenAI

Using Ollama? See the Ollama Integration section for more custom setup instructions.

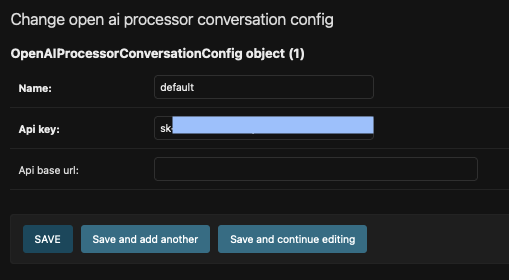

- Create a new AI Model API in the server admin settings.

  - Add your OpenAI API key

  - Give the configuration a friendly name like OpenAI

  - (Optional) Set the API base URL. It is only relevant if you're using another OpenAI-compatible proxy server like Ollama or LMStudio.

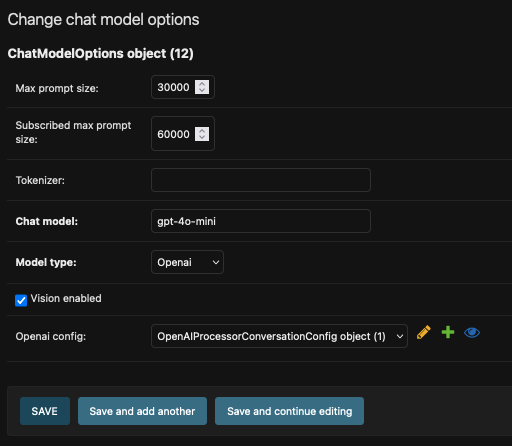

- Create a new chat model

  - Set the chat-model field to an OpenAI chat model. Example: gpt-4o.

  - Make sure to set the model-type field to OpenAI.

  - If your model supports vision, set the vision enabled field to true. This is currently only supported for OpenAI models with vision capabilities.

  - The tokenizer and max-prompt-size fields are optional. Set them only if you're sure of the tokenizer or token limit for the model you're using. Contact us if you're unsure what to do here.

Anthropic

- Create a new AI Model API in the server admin settings.

  - Add your Anthropic API key

  - Give the configuration a friendly name like Anthropic. Do not configure the API base URL.

- Create a new chat model

  - Set the chat-model field to an Anthropic chat model. Example: claude-3-5-sonnet-20240620.

  - Set the model-type field to Anthropic.

  - Set the ai model api field to the Anthropic AI Model API you created in the first step.

Gemini

- Create a new AI Model API in the server admin settings.

  - Add your Gemini API key

  - Give the configuration a friendly name like Gemini. Do not configure the API base URL.

- Create a new chat model

  - Set the chat-model field to a Google Gemini chat model. Example: gemini-1.5-flash.

  - Set the model-type field to Gemini.

  - Set the ai model api field to the Gemini AI Model API you created in the first step.

Offline

Offline chat stays completely private and can work without internet using any open-weights model.

Prerequisites

- Minimum 8 GB RAM. Recommend 16 GB VRAM

- Minimum 5 GB of disk available

- An Nvidia or AMD GPU, or a Mac M1+ machine, would significantly speed up chat responses

Setup

- Get the name of your preferred chat model from HuggingFace. Most GGUF format chat models are supported.

- Open the create chat model page on the admin panel

- Set the chat-model field to the name of your preferred chat model

  - Make sure the model-type is set to Offline

- Set the newly added chat model as your preferred model in your User chat settings and Server chat settings.

- Restart the thynk server and start chatting with your new offline model!
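Before the first offline model download, you may want to check the 5 GB disk minimum mentioned above. A small sketch using the standard library follows. The function names are hypothetical, and the path to check is an assumption, since this guide does not say where models are stored. Pass whichever directory applies to your setup.

```python
import shutil

GB = 10 ** 9  # decimal gigabytes


def free_disk_gb(path: str = ".") -> float:
    """Free space, in GB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / GB


def has_room_for_offline_model(path: str = ".", minimum_gb: float = 5.0) -> bool:
    """True if at least `minimum_gb` of disk is free at `path`."""
    return free_disk_gb(path) >= minimum_gb


if __name__ == "__main__":
    print(f"{free_disk_gb():.1f} GB free, enough: {has_room_for_offline_model()}")
```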

Set your preferred default chat model in the Default, Advanced fields of your ServerChatSettings.

thynk uses these chat models for all intermediate steps like intent detection, web search etc.

- The tokenizer and max-prompt-size fields are optional. Set them only if you're sure of the tokenizer or token limit for the model you're using. This improves context stuffing. Contact us if you're unsure what to do here.

- Only tick the vision enabled field for OpenAI models with vision capabilities like gpt-4o. Vision capabilities in other chat models are not currently utilized.

Sync your Knowledge

- You can chat with your notes and documents using thynk.

- thynk can keep your files and folders synced using the thynk Desktop, Obsidian or Emacs clients.

- Your Notion workspace can be directly synced from the web app.

- You can also just drag and drop specific files you want to chat with on the Web app.

Setup thynk Clients

The thynk web app is available by default to chat, search and configure thynk.

You can also install a thynk client to easily access it from Obsidian, Emacs, WhatsApp or your OS and keep your documents synced with thynk.

Set the host URL on your client's settings page to your thynk server URL. By default, use http://127.0.0.1:42110 or http://localhost:42110. Note that localhost may not work in all cases.


- Read the thynk Desktop app setup docs.

- Read the thynk Emacs package setup docs.

- Read the thynk Obsidian plugin setup docs.

- Read the thynk Whatsapp app setup docs.

Upgrade

Upgrade Server

Pip

pip install --upgrade khoj

Note: To upgrade to the latest pre-release version of the thynk server, add pip's --pre flag to the command above.

Docker

Run the commands below from the same directory where you have your docker-compose.yml file. This will fetch the latest build and upgrade your server.

# Windows users should use their WSL2 terminal to run these commands

cd ~/.thynk # assuming your thynk docker-compose.yml file is here

docker-compose up --build

Upgrade Clients

Desktop

- The Desktop app automatically updates to the latest released version on restart.

- You can manually download the latest version from the thynk Website.

Emacs

- Use your Emacs Package Manager to Upgrade

- See thynk.el package setup for details

Obsidian

- Upgrade via the Community plugins tab on the settings pane in the Obsidian app

- See the thynk plugin setup for details

Uninstall

Uninstall Server

Pip

# uninstall thynk server

pip uninstall khoj

# delete thynk postgres db

dropdb khoj -U postgres

Docker

Run the command below from the same directory where you have your docker-compose file. This will remove the server containers, networks and volumes.

docker-compose down --volumes

Uninstall Clients


Uninstall the thynk Desktop client in the standard way from your OS.

Uninstall the thynk Emacs package in the standard way from Emacs.

Uninstall via the Community plugins tab on the settings pane in the Obsidian app

Troubleshoot

Dependency conflict when trying to install thynk python package with pip

- Reason: When conflicting dependency versions are required by thynk vs other python packages installed on your system

- Fix: Install thynk in a python virtual environment using venv or pipx to avoid these dependency conflicts

- Process:

  - Install pipx

  - Use pipx to install thynk to avoid dependency conflicts with other python packages.

pipx install khoj

  - Now start thynk using the standard steps described earlier
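The fix above also mentions venv as an alternative to pipx. A minimal sketch of creating such an isolated environment with Python's standard venv module follows. The function name and the example directory are hypothetical.

```python
import os
import venv
from pathlib import Path


def create_isolated_env(env_dir: str, with_pip: bool = True) -> Path:
    """Create a virtual environment and return the path to its interpreter."""
    venv.create(env_dir, with_pip=with_pip)
    # Virtual environments keep their executables in Scripts on Windows, bin elsewhere.
    if os.name == "nt":
        return Path(env_dir) / "Scripts" / "python.exe"
    return Path(env_dir) / "bin" / "python"


if __name__ == "__main__":
    # Hypothetical location; any writable directory works.
    python = create_isolated_env(os.path.expanduser("~/.thynk/venv"))
    print(f"Install the server with: {python} -m pip install khoj")
```

Packages installed with the returned interpreter stay inside that directory, so they cannot conflict with the packages of your system Python.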

Install fails while building Tokenizer dependency

- Details: pip install khoj fails while building the tokenizers dependency. Complains about Rust.

- Fix: Install Rust to build the tokenizers package. For example on Mac run:

brew install rustup

rustup-init

source ~/.cargo/env

- Refer: Issue with Fix for more details

thynk in Docker errors out with "Killed" in error message

- Fix: Increase RAM available to Docker Containers in Docker Settings

- Refer: StackOverflow Solution, Configure Resources on Docker for Mac